Fixed Pay

What is fixed pay?

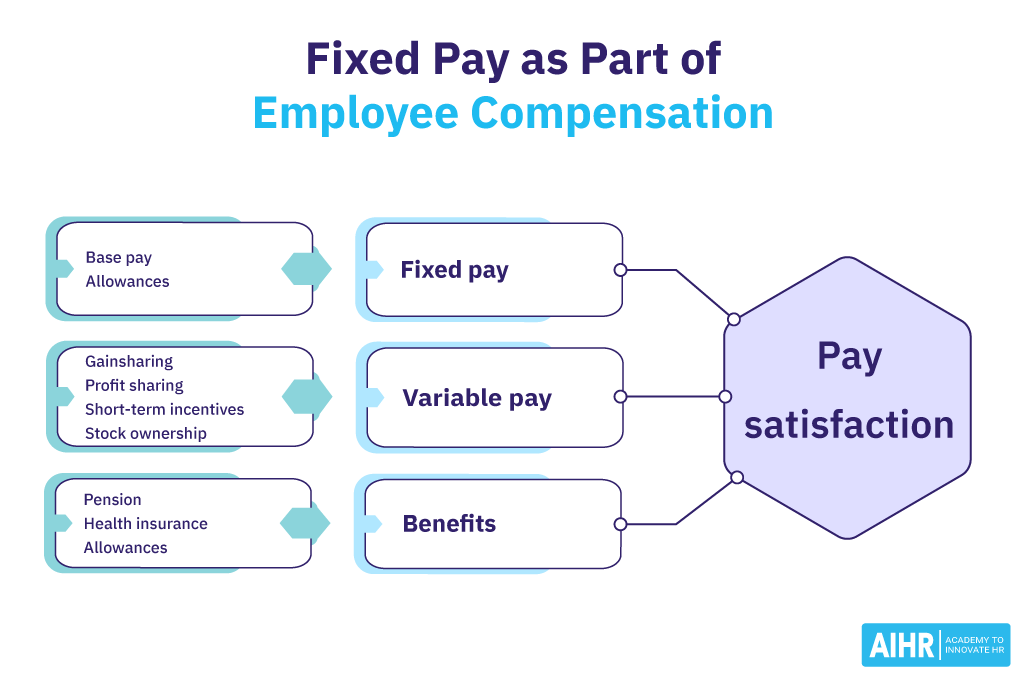

Fixed pay, or fixed salary, is the predetermined amount an employer pays an employee at the end of every payroll cycle. It covers all remuneration the company guarantees, most commonly in the form of a monthly or annual salary.

Additional contributions to things like medical insurance, a retirement fund, or allowances (car, house, etc.) may or may not be included in fixed pay, depending on the company policy and/or employment agreement.

The term ‘fixed’ indicates the same amount is paid to an employee on a regular basis, irrespective of hours worked or the quality of work performed.

Fixed pay vs. variable pay

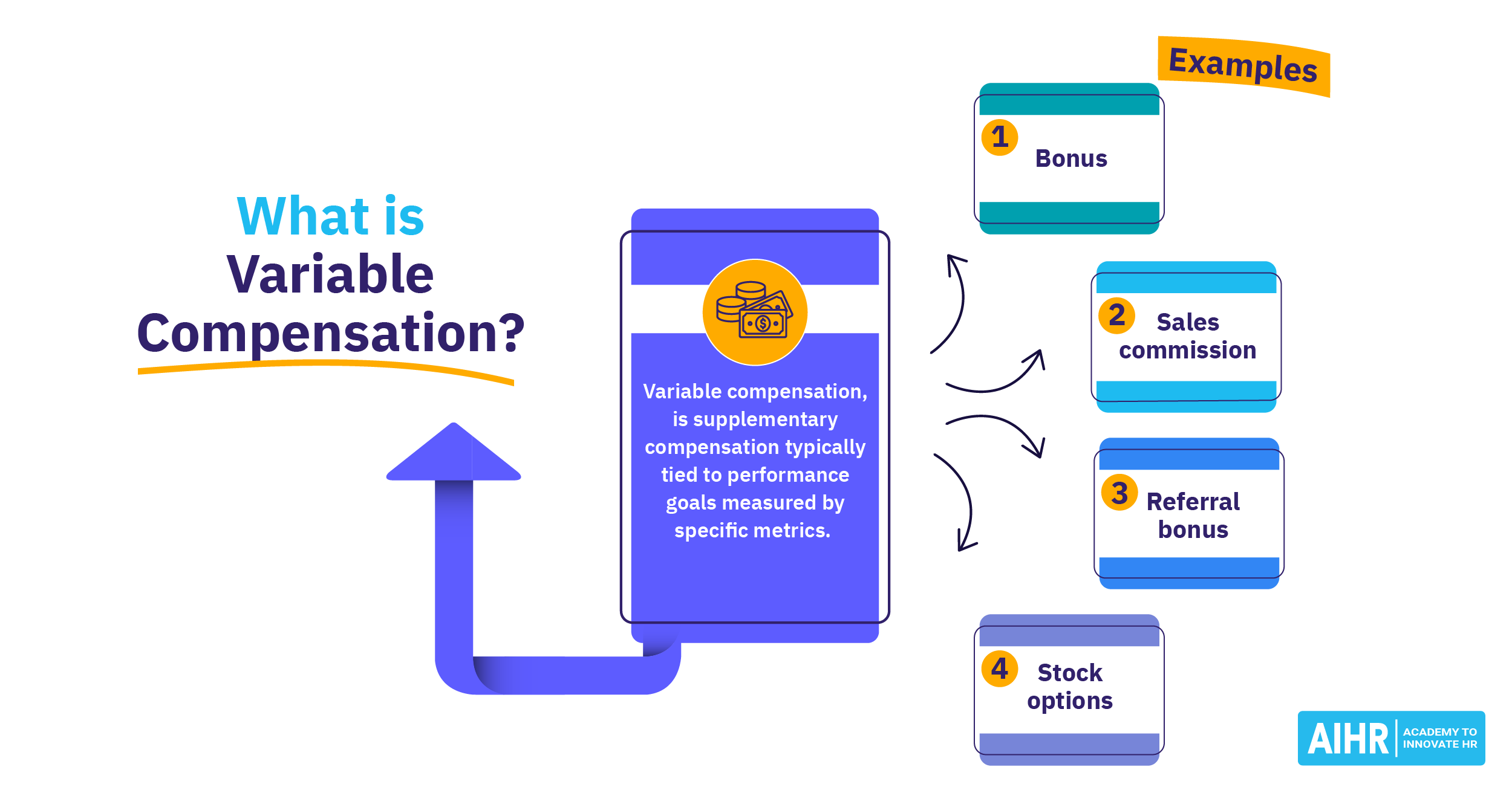

Variable pay is remuneration that depends on performance, either the employee’s or the company’s. It is offered in addition to the employee’s fixed pay. Performance bonuses, sales commissions, referral bonuses, and profit-sharing are some examples of variable pay.

The term ‘variable’ indicates that the amount paid to an employee can change depending on the employee’s or the company’s performance.

Example 1:

A manager is paid a salary of $2,000 every month. The manager’s contract also includes a fixed housing allowance of $200 per month. Together, these two regular payments make up the manager’s fixed pay of $2,200 per month.

At the end of the year, the manager is paid a $4,500 bonus. The bonus is considered variable pay.
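The arithmetic in Example 1 can be sketched in a few lines of Python. This is a minimal illustration, assuming the salary and housing allowance are each paid every month for twelve months and the bonus is paid once; the function name `annual_pay_breakdown` is hypothetical.

```python
# Example 1 figures from the text (USD).
MONTHLY_SALARY = 2_000
MONTHLY_HOUSING_ALLOWANCE = 200
YEAR_END_BONUS = 4_500
MONTHS_PER_YEAR = 12  # assumption: both fixed components are paid every month


def annual_pay_breakdown(salary: int, allowance: int, bonus: int) -> dict:
    """Hypothetical helper: split one year of pay into fixed and variable parts."""
    fixed_monthly = salary + allowance            # guaranteed every payroll cycle
    fixed_annual = fixed_monthly * MONTHS_PER_YEAR
    variable_annual = bonus                       # performance-based, not guaranteed
    return {
        "fixed_monthly": fixed_monthly,
        "fixed_annual": fixed_annual,
        "variable_annual": variable_annual,
        "total_annual": fixed_annual + variable_annual,
    }


breakdown = annual_pay_breakdown(MONTHLY_SALARY, MONTHLY_HOUSING_ALLOWANCE, YEAR_END_BONUS)
print(breakdown)
# {'fixed_monthly': 2200, 'fixed_annual': 26400, 'variable_annual': 4500, 'total_annual': 30900}
```

Only the $4,500 bonus would change from year to year; the $26,400 fixed portion is known in advance.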

Example 2:

The same manager moves to a new city and asks to work reduced hours. As a result, the company switches the manager to an hourly wage.

The manager logs 32 hours in one month and 45 hours in the following month, so the wage differs from month to month. The manager is also awarded a two-month allowance to help relocate to the new city and is paid a $450 sales commission for closing key deals for the company. In this case, all components of the manager’s pay are considered variable pay.
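To see why an hourly wage counts as variable pay, the sketch below computes two months of earnings. The example does not give an hourly rate, so the $25 rate is a hypothetical assumption, as is paying the commission in the second month; the relocation allowance is left out because its amount is not stated. `hourly_earnings` is an illustrative name.

```python
# Example 2: earnings that scale with hours worked change from month to month.
HOURLY_RATE = 25  # hypothetical rate in USD; the example does not state one
HOURS_LOGGED = {"month_1": 32, "month_2": 45}  # hours from the example
SALES_COMMISSION = 450  # from the example; assumed to be paid in month 2


def hourly_earnings(hours: int, rate: int) -> int:
    """Hypothetical helper: a month's wage is hours multiplied by the hourly rate."""
    return hours * rate


month_1 = hourly_earnings(HOURS_LOGGED["month_1"], HOURLY_RATE)
month_2 = hourly_earnings(HOURS_LOGGED["month_2"], HOURLY_RATE) + SALES_COMMISSION
print(month_1, month_2)  # 800 1575
```

Because neither figure is known before the month ends, none of this pay is guaranteed in the way a fixed salary is.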

Fixed pay vs. basic pay

Fixed pay and basic pay are both components of an employee’s pay, but they differ in scope and purpose. Here’s a clear distinction between the two:

| Aspect | Fixed pay | Basic pay |
|---|---|---|
| Definition | The guaranteed portion of an employee’s total salary, including allowances and benefits, but excluding variable components like bonuses. | The core salary component, forming the basis for calculating other pay elements like allowances and benefits. |
| Components | Includes basic salary plus fixed allowances like housing, transport, etc. | Does not include allowances, benefits, or bonuses. |
| Variability | Remains fixed unless components like allowances are adjusted. | Remains constant unless revised during salary reviews. |
| Example | $5,000 per month: $3,000 basic + $2,000 allowances. | $3,000 per month (no extras). |
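The relationship in the comparison above, where basic pay is one component of fixed pay, can be expressed as a small data structure. This is a minimal sketch: the class `MonthlyPay` is hypothetical, and the $500 bonus is an assumed figure added only to show that variable pay counts toward neither total.

```python
from dataclasses import dataclass


@dataclass
class MonthlyPay:
    basic_pay: int             # core salary component (USD)
    fixed_allowances: int = 0  # guaranteed allowances, e.g. housing or transport
    bonus: int = 0             # variable pay: counted in neither fixed nor basic pay

    @property
    def fixed_pay(self) -> int:
        # Fixed pay = basic pay + fixed allowances; the bonus is deliberately excluded.
        return self.basic_pay + self.fixed_allowances


pay = MonthlyPay(basic_pay=3_000, fixed_allowances=2_000, bonus=500)  # bonus is hypothetical
print(pay.basic_pay, pay.fixed_pay)  # 3000 5000
```

Deriving `fixed_pay` from its parts, rather than storing it separately, keeps the two figures consistent when an allowance is adjusted.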

Advantages and disadvantages of fixed pay

Advantages

- Budgeting ease: An organization can forecast and budget more accurately because fixed salaries change little from month to month. Employees also know their pay in advance, which makes it easier for them to manage their finances.

- Employee security: Some employees prefer to be guaranteed a fixed monthly amount. This can have a positive effect on employee retention and satisfaction rates.

- Simplified payroll management: Fixed salary reduces administrative complexity, as there are fewer calculations and adjustments compared to performance-based or variable pay systems.

- Alignment with organizational goals: Fixed pay works well for roles where performance is hard to quantify or where collaboration and consistent contribution are more important than individual performance metrics.

Disadvantages

- Risk of complacency: Employees might become complacent, focusing only on fulfilling basic job requirements without striving for improvement or innovation.

- Difficulty in managing underperformance: Employers must pay the agreed salary regardless of an employee’s output or contribution, which can result in paying underperforming employees the same as high achievers.

- Limited cost flexibility: Fixed pay creates rigid cost structures, making it harder for employers to adjust expenses in response to financial challenges or changes in business performance.

- Challenges in retaining top talent: High-performing employees might seek opportunities elsewhere if they feel their efforts would be better rewarded under a more performance-based pay structure.

FAQ

What is fixed pay?

Fixed pay is the guaranteed portion of an employee’s salary, including basic pay, allowances (e.g., housing, transport), and fixed benefits, but excluding bonuses and other performance-based components. It provides a consistent income regardless of performance or other variable factors.

What is an example of fixed pay?

An example of fixed pay is a set monthly salary, such as $5,000, that is paid regardless of hours worked or company performance.

What is the difference between fixed pay and variable pay?

Fixed pay is the guaranteed part of an employee’s salary, including basic pay and allowances, and provides a consistent income. Variable pay, such as bonuses, incentives, or commissions, depends on individual or company performance and can fluctuate.